The AI revolution isn’t slowing down—if anything, it’s accelerating at the silicon level. From Arm’s expanded on-device AI initiative to Tesla’s chip manufacturing push and Intel’s new data-center GPU, the hardware race behind artificial intelligence continues to reshape global tech. Here’s a breakdown of the biggest AI hardware and infrastructure stories this week, and what they mean for creators, gamers, and professionals alike.

1. Arm Brings AI to the Edge

Arm Holdings has expanded its Flexible Access licensing program to include its latest Armv9 edge AI platform, making it cheaper for startups and device manufacturers to start designing AI chips.

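More than 300 companies already use Flexible Access, which gives design teams low- or no-cost upfront access to Arm IP and defers license fees until a chip goes to manufacturing. Adding the edge AI platform helps developers build neural processing directly into mobile and IoT devices.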

Why it matters:

This move pushes AI beyond the cloud and into your hands—literally. Expect a new wave of AI-powered phones, cameras, and embedded systems capable of real-time inference without relying on massive servers.

For PC enthusiasts:

While this trend centers on low-power chips, it reinforces a broader theme: compute is decentralizing. The same efficiency and inference-acceleration techniques are showing up in desktop GPUs and AI PCs, making next-gen consumer hardware better at running AI locally.

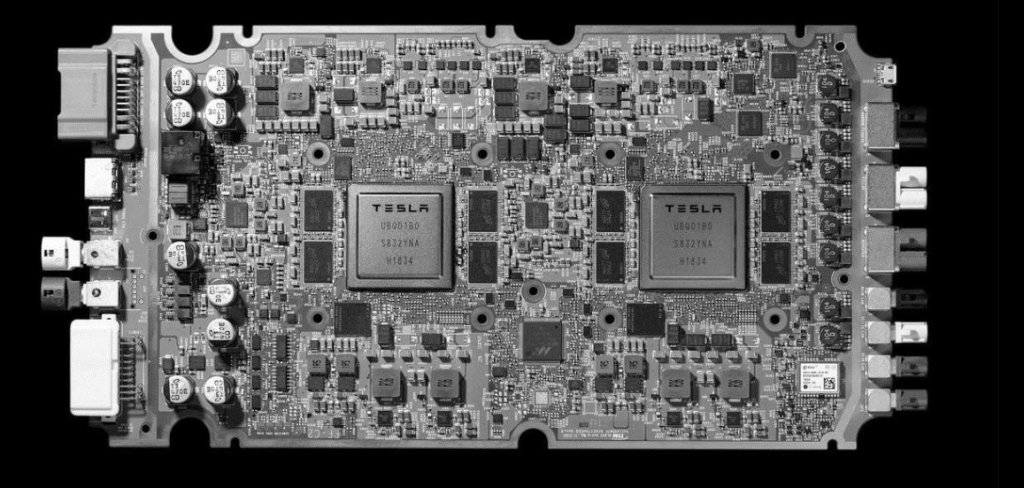

2. Tesla Teams with Samsung and TSMC for AI Chips

Elon Musk confirmed that Tesla’s upcoming AI5 chip—designed to power autonomous vehicles and training clusters—will be manufactured by both Samsung in Texas and TSMC in Arizona.

Musk clarified that Tesla isn’t trying to replace NVIDIA; the goal is to secure long-term supply and reduce dependence on any single vendor.

Why it matters:

This is part of a growing trend where large OEMs build their own silicon to optimize cost, energy, and performance. Similar vertical integration may ripple across industries—from automakers to robotics firms—driving long-term demand for GPUs and AI accelerators.

Newegg takeaway:

AI workloads extend far beyond data centers. As companies like Tesla build dedicated compute hardware, consumers and prosumers are likely to look for AI-ready gaming PCs and ABS workstations capable of model fine-tuning, simulation, and local training.

3. Broadcom Secures Massive OpenAI Hardware Deal

Broadcom’s stock surged after the company announced a multi-year collaboration with OpenAI to deliver next-generation custom AI accelerators and networking systems.

The plan calls for roughly 10 gigawatts’ worth of OpenAI-designed accelerators, built in partnership with Broadcom, with deployments starting in the second half of 2026 and finishing by the end of 2029.
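Gigawatts measure power, not compute, so a quick back-of-envelope calculation helps put the 10 GW figure in perspective. The Python sketch below converts a power budget into annual energy and into a rough accelerator count. The utilization factor and the per-accelerator wattage are hypothetical inputs, not figures published by Broadcom or OpenAI.

```python
# Back-of-envelope: what does "10 gigawatts of AI compute" mean in energy terms?
# Assumption (hypothetical): the full power budget runs continuously.
# Real deployments ramp up over years and rarely sit at 100% utilization.

HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Energy drawn in one year, in terawatt-hours (1 TWh = 1,000 GWh)."""
    return power_gw * utilization * HOURS_PER_YEAR / 1_000


def accelerator_count(power_gw: float, watts_per_accelerator: float) -> int:
    """Rough number of accelerators a power budget can support.

    watts_per_accelerator is a hypothetical all-in figure (chip plus host,
    networking, and cooling share), not a published Broadcom or OpenAI spec.
    """
    return int(power_gw * 1e9 // watts_per_accelerator)


if __name__ == "__main__":
    print(f"{annual_energy_twh(10):.1f} TWh/year at full load")  # 87.6
    print(f"{accelerator_count(10, 1_500):,} accelerators at a hypothetical 1.5 kW each")
```

At full load, 10 GW works out to 87.6 TWh per year (10 GW × 8,760 hours). Because real deployments ramp up gradually and rarely run flat out, treat this as an upper bound rather than a forecast.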

Why it matters:

The partnership highlights the growing importance of high-bandwidth networking and optical interconnects, which GPUs alone can’t provide. AI now demands full-stack infrastructure: compute, connectivity, and cooling.

For enterprise and creators:

The same trend is trickling down to advanced workstations. Expect upcoming AI desktops to feature PCIe 5.0 (and eventually 6.0) support, liquid-cooling loops, and multi-GPU designs inspired by hyperscale data centers.

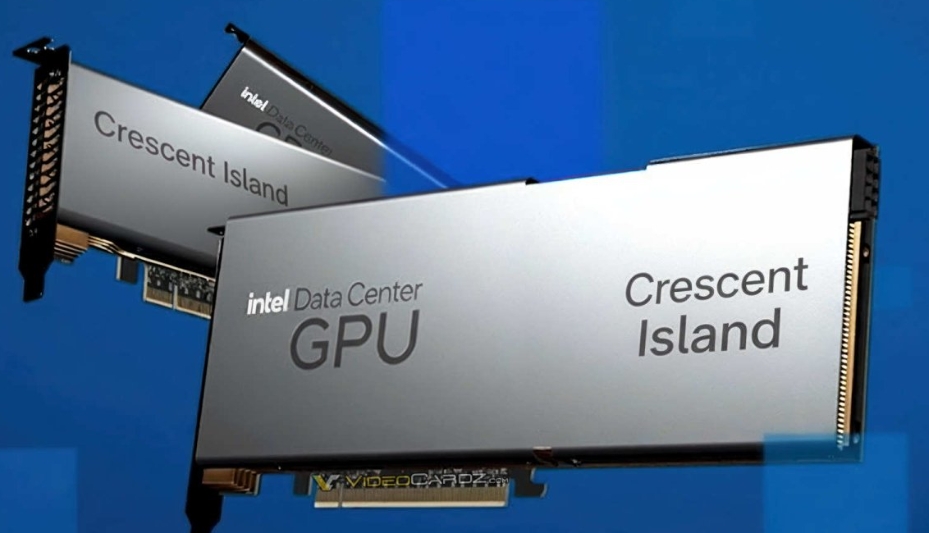

4. Intel Unveils “Crescent Island” AI GPU

Intel introduced its next-generation data-center GPU, code-named Crescent Island, at the 2025 OCP Global Summit.

The new GPU targets AI inference, pairing 160 GB of LPDDR5X memory with an emphasis on performance per watt for real-time applications such as chatbots, recommendation systems, and image analysis. Intel expects customer sampling in the second half of 2026.
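Memory capacity matters for inference because a model’s weights have to fit in GPU memory before it can serve a single request. Here is a minimal Python sketch of the usual rule of thumb (parameters × bytes per parameter). The 80% headroom default and the example memory sizes are illustrative assumptions, and the estimate ignores the KV cache and runtime overhead, which add more on top.

```python
# Rule of thumb: weight memory ~= parameter count x bytes per parameter.
# Ignores the KV cache, activations, and runtime overhead.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 uses the same 2 bytes
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes).

    Billions of parameters x bytes per parameter gives GB directly.
    """
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, gpu_memory_gb: float,
         headroom: float = 0.8) -> bool:
    """True if the weights use at most `headroom` of device memory.

    The 80% default is a hypothetical rule of thumb that leaves room for
    the KV cache; it is not a vendor guideline.
    """
    return weight_memory_gb(params_billion, precision) <= gpu_memory_gb * headroom


if __name__ == "__main__":
    for params, prec, mem in [(70, "fp16", 160), (70, "int8", 160), (8, "fp16", 24)]:
        need = weight_memory_gb(params, prec)
        print(f"{params}B @ {prec}: {need:.0f} GB of weights, fits in {mem} GB: {fits(params, prec, mem)}")
```

For example, a hypothetical 70-billion-parameter model needs about 140 GB for FP16 weights, more than 80% of a 160 GB device, while an 8-bit copy (about 70 GB) fits comfortably. On a 24 GB consumer card, an 8B model in FP16 (about 16 GB) fits, which is why model size and precision decide what you can run locally.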

Why it matters:

Inference—running AI models after they’re trained—is now overtaking training as the largest source of compute demand. Crescent Island is Intel’s answer to NVIDIA’s dominance in this space.
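Why would inference overtake training? A widely used approximation for dense transformer models puts training at about 6·N·D floating-point operations (N parameters, D training tokens) and inference at about 2·N operations per generated token. Setting the two equal shows that cumulative inference passes the one-time training cost once a model has served about 3·D tokens. The Python sketch below encodes that approximation; the model size and traffic figures in the usage note are hypothetical.

```python
# Common dense-transformer approximations (rough, order-of-magnitude only):
#   training  ~ 6 * N * D FLOPs  (forward + backward pass over D training tokens)
#   inference ~ 2 * N FLOPs per generated token (forward pass only)
# Ignores attention overhead, batching efficiency, and hardware utilization.


def training_flops(n_params: float, train_tokens: float) -> float:
    """One-time training compute for a model with n_params parameters."""
    return 6 * n_params * train_tokens


def inference_flops(n_params: float, served_tokens: float) -> float:
    """Cumulative inference compute after serving `served_tokens` tokens."""
    return 2 * n_params * served_tokens


def days_until_inference_exceeds_training(train_tokens: float,
                                          tokens_served_per_day: float) -> float:
    """Days of serving until inference compute matches training compute.

    N cancels out: 2 * N * T = 6 * N * D  =>  T = 3 * D served tokens.
    """
    return 3 * train_tokens / tokens_served_per_day


if __name__ == "__main__":
    n, d = 70e9, 15e12  # hypothetical 70B-parameter model, 15T training tokens
    print(f"training compute: {training_flops(n, d):.2e} FLOPs")
    print(f"break-even after {days_until_inference_exceeds_training(d, 1e12):.0f} days at 1T tokens/day")
```

Under these hypothetical numbers, serving 1 trillion tokens per day would match the training compute in about 45 days (3 × 15 ÷ 1). Training happens once, but inference keeps accumulating for as long as a model stays in service.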

For workstation builders:

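Crescent Island itself is a data-center part, but its inference-first design signals where GPU roadmaps are heading. As those priorities filter down, professional AI PCs and render workstations for data scientists, 3D artists, and developers running local models stand to benefit.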

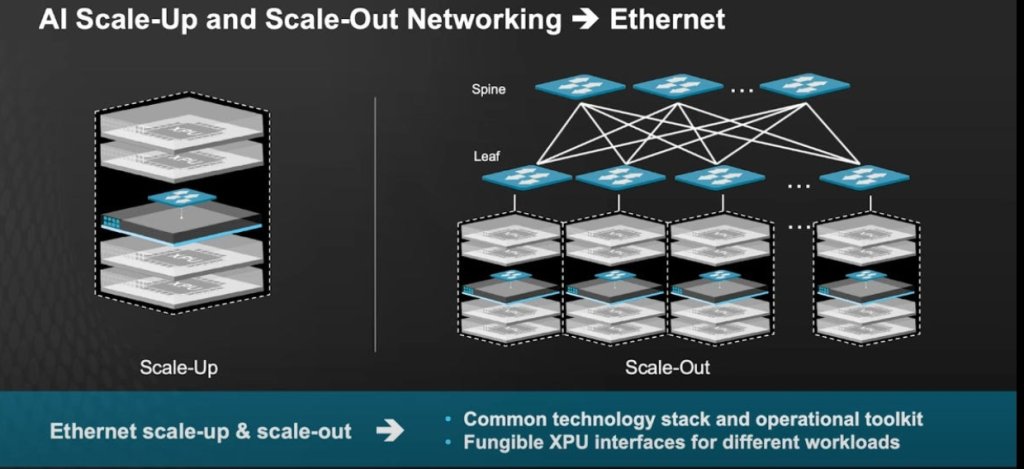

5. Infrastructure Innovation: Networks, Cooling, and Power

At the 2025 Open Compute Project (OCP) Global Summit, industry leaders including Meta, AMD, and Broadcom showcased advances in disaggregated networking, optical interconnects, and liquid cooling for large AI clusters.

These designs aim to maximize GPU utilization while reducing power waste.

Trend insight:

In the AI era, performance bottlenecks often lie outside the chip—network throughput, chassis thermals, and rack design are just as critical.
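To see why network throughput can become the bottleneck, consider a bandwidth-only estimate for one common pattern: a ring all-reduce, which data-parallel training uses to synchronize gradients across GPUs. In a ring, each GPU sends (and receives) 2·(n−1)/n times the buffer size, so transfer time is roughly that volume divided by per-GPU link bandwidth. This Python sketch is a simplification that assumes a ring algorithm and ignores latency and compute overlap; the model size and link speeds in the usage note are hypothetical.

```python
# Bandwidth-only estimate of a ring all-reduce.
# Each GPU transfers 2 * (n - 1) / n * buffer bytes
# (a reduce-scatter phase plus an all-gather phase).
# Ignores per-message latency, compression, and compute/communication overlap.


def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert a link speed in Gb/s to GB/s (8 bits per byte)."""
    return gbit_per_s / 8


def ring_allreduce_seconds(buffer_gb: float, n_gpus: int,
                           link_gb_per_s: float) -> float:
    """Approximate time to all-reduce `buffer_gb` across `n_gpus` GPUs."""
    if n_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    volume_gb = 2 * (n_gpus - 1) / n_gpus * buffer_gb
    return volume_gb / link_gb_per_s


if __name__ == "__main__":
    grads_gb = 14.0  # hypothetical: ~7B parameters in BF16 (2 bytes each)
    for link_gbit in (100, 400):
        t = ring_allreduce_seconds(grads_gb, 8, gbit_to_gbyte(link_gbit))
        print(f"{link_gbit} Gb/s link: {t:.2f} s per gradient sync")
```

With 8 GPUs, syncing 14 GB of gradients takes about 0.49 s per step over 400 Gb/s links and about 1.96 s over 100 Gb/s links, time in which GPUs can sit idle unless communication overlaps with compute. Production clusters use faster fabrics and hierarchical collectives, so these numbers only illustrate how the network scales into the picture.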

Future AI-ready systems, even those built for creators and small businesses, are likely to borrow from these innovations: high-efficiency PSUs, better airflow, and AI-optimized network cards.

6. AI Is Getting Smaller, Cheaper, and Faster

The Stanford AI Index 2025 reports that the cost of running inference at GPT-3.5 level dropped more than 280× between November 2022 and October 2024, while hardware energy efficiency improved by about 40% per year.
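These two figures compound differently, and converting them to a common annual basis shows how much of the price drop hardware alone can explain. This Python sketch treats the window as two years (the report’s comparison runs from late 2022 to late 2024) and treats the cost and efficiency figures as directly comparable; both are simplifying assumptions.

```python
# Convert a total improvement over a period into a yearly rate, and back.
# Assumptions: a two-year window, and cost and efficiency gains treated as
# directly comparable multipliers (a simplification).


def annualized_factor(total_factor: float, years: float) -> float:
    """Per-year multiplier that compounds to total_factor over `years`."""
    return total_factor ** (1 / years)


def compounded_factor(annual_gain: float, years: float) -> float:
    """Total multiplier from a steady yearly gain, e.g. 0.40 for +40%/year."""
    return (1 + annual_gain) ** years


def unexplained_by_hardware(total_factor: float, annual_gain: float,
                            years: float) -> float:
    """Share of the total improvement left after removing hardware gains."""
    return total_factor / compounded_factor(annual_gain, years)


if __name__ == "__main__":
    print(f"per-year cost drop: {annualized_factor(280, 2):.1f}x")
    print(f"hardware gain over 2 years: {compounded_factor(0.40, 2):.2f}x")
    print(f"left to other factors: {unexplained_by_hardware(280, 0.40, 2):.0f}x")
```

A 280× drop over two years is about 16.7× per year (√280), while 40% annual gains compound to only 1.96× over the same span. That leaves a factor of roughly 143 to come from elsewhere, such as smaller models reaching the same quality and more efficient serving software.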

This cost curve is democratizing AI: capabilities that once demanded data-center hardware now run on a workstation or even a laptop GPU.

Why it matters:

Expect a wave of local-AI tools and on-device copilots that rely on efficient consumer GPUs and CPUs.

For Newegg readers, this means your next PC build might double as your personal AI development platform.