ABS Zaurion Pro Workstation - Powered by aiDAPTIV+ & Ubuntu

- Cost-Effective, On-Premise LLM Training

- 80B–90B Parameter LLM Inference

- Fine-Tuning for 70B+ Parameter LLMs

Next-Level AI Infrastructure for Training and Deployment

What is the ABS AI Workstation?

The ABS AI Workstation, powered by PHISON aiDAPTIV+, is a high-performance, scalable AI infrastructure solution designed for cutting-edge generative AI workloads. Supporting both large-scale inference and full-parameter training, it can handle large language models (LLMs) with up to 200B parameters.

Unlike traditional AI PCs or entry-level AI devices, ABS delivers enterprise-grade computing power, featuring advanced memory architecture and multi-GPU scalability. Built for AI labs, research institutions, and forward-thinking enterprises, it provides the efficiency, flexibility, and performance needed to accelerate AI development and deployment.

Key Benefits

Scalable Inference & Fine-Tuning

- Train and deploy Llama-3-70B, Stable Diffusion XL, Whisper, and more.

- Supports both full-model training and domain-specific fine-tuning.

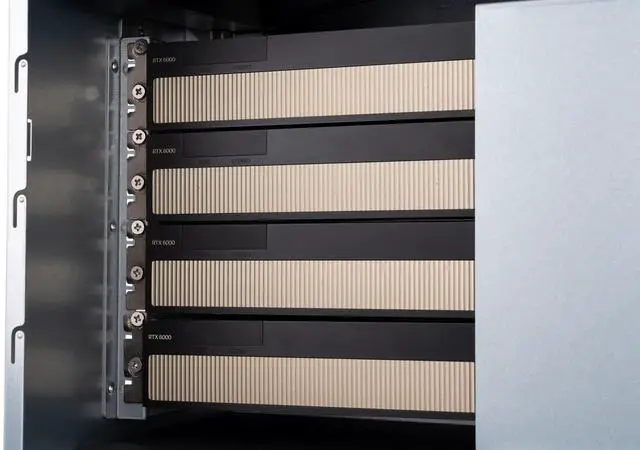

High-Performance Multi-GPU Architecture

- Equipped with 4x 48GB GPUs for demanding AI workloads.

- Scales seamlessly for 13B–200B parameter model training.

Advanced Memory Expansion

- aiDAPTIV+ architecture overcomes GPU memory capacity limits.

- Extends GPU memory with NAND flash so that models larger than the GPUs' combined memory can be trained.
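
To see why memory extension matters on a system with 4x 48GB GPUs, the short sketch below estimates how much memory a model needs for inference weights versus full-parameter training. The byte counts are common rules of thumb and are assumptions here, not PHISON or ABS figures: 2 bytes per parameter for FP16 weights, and roughly 16 bytes per parameter for mixed-precision training with an Adam-style optimizer (weights, gradients, and FP32 optimizer state), excluding activations.

```python
# Rule-of-thumb memory estimate. The byte counts below are assumptions,
# not vendor figures, and activation memory is ignored.
FP16_BYTES_PER_PARAM = 2    # model weights only, FP16/BF16
TRAIN_BYTES_PER_PARAM = 16  # 2 (weights) + 2 (gradients) + 12 (FP32 Adam state)

GPU_COUNT = 4        # as listed for this workstation
GPU_MEMORY_GB = 48   # per GPU


def weights_gb(params_billion):
    """Memory for FP16 weights; billions of params x bytes/param = GB."""
    return params_billion * FP16_BYTES_PER_PARAM


def training_state_gb(params_billion):
    """Memory for weights, gradients, and optimizer state during training."""
    return params_billion * TRAIN_BYTES_PER_PARAM


def fits_in_vram(required_gb):
    """True if the requirement fits in the combined GPU memory."""
    return required_gb <= GPU_COUNT * GPU_MEMORY_GB


for size in (13, 70, 200):
    w = weights_gb(size)
    t = training_state_gb(size)
    print(f"{size}B: weights {w} GB (fits: {fits_in_vram(w)}), "
          f"training state {t} GB (fits: {fits_in_vram(t)})")
```

Under these assumptions, a 70B model needs about 140 GB for FP16 weights, which fits in the 192 GB of combined GPU memory, but about 1,120 GB of training state, which does not. That gap is what the NAND-based extension is meant to cover.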

Secure On-Premise AI Deployment

- Keep data, models, and IP secure within local infrastructure.

- Customize AI for specific languages, industries, and datasets.

Who Needs the ABS AI Workstation?

Advanced Research and Academia

- Provides comprehensive curriculum support for large-scale generative AI education.

- Empowers AI research labs with cutting-edge model development and experimentation.

Enterprise AI Deployment

- Develop and deploy domain-specific AI solutions for healthcare, finance, legal, and retail industries.

- Customize LLMs to adapt to regional languages and regulatory requirements.

Software and Application Development

- Train and deploy chatbots, AI co-pilots, document assistants, and multi-modal applications.

- Accelerate the development of commercial AI-powered platforms.

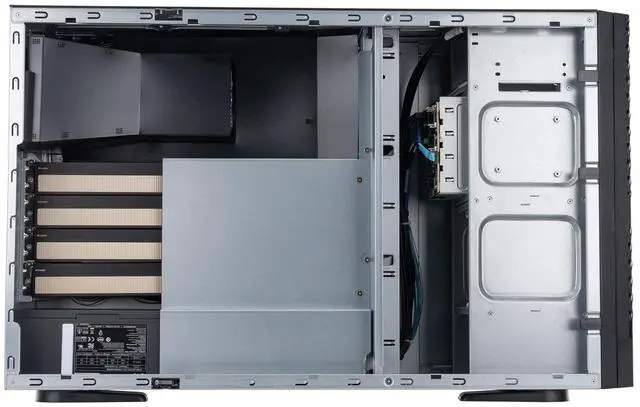

System Highlights

- CPU: Intel Xeon

- GPU: 4x NVIDIA RTX 6000 Ada (48GB each)

- Memory: 512GB DDR5 ECC

- Storage: High-speed NVMe SSDs + aiDAPTIV+ AI100E Modules

- Middleware: aiDAPTIVLink for seamless AI training, optimization, and fine-tuning

Supported Models

- Llama, Llama-2, Llama-3, CodeLlama

- Vicuna, Falcon, Whisper, CLIP Large

- MetaFormer, ResNet, DeiT Base, Mistral, TAIDE

- More models are added continually

Why Choose the ABS AI Workstation?

- Scalable AI Training – Supports 13B to 200B parameter models with multi-GPU flexibility.

- Privacy-First Design – Secure on-premise model training and inference.

- Breakthrough Memory Innovation – Overcomes GPU memory capacity limits with NAND memory extension.

- Education-Ready – Ideal for academic AI research and training.

- Enterprise-Grade Deployment – Optimized for production-ready AI applications.

A Good Way to Harness the Full Potential of AI

The ABS AI Workstation is the foundation of next-generation AI infrastructure, delivering unparalleled compute power, innovative memory solutions, and seamless deployment options. Whether you're training future AI leaders or deploying enterprise-grade LLMs, it provides the flexibility, security, and performance needed to drive real-world AI innovation.