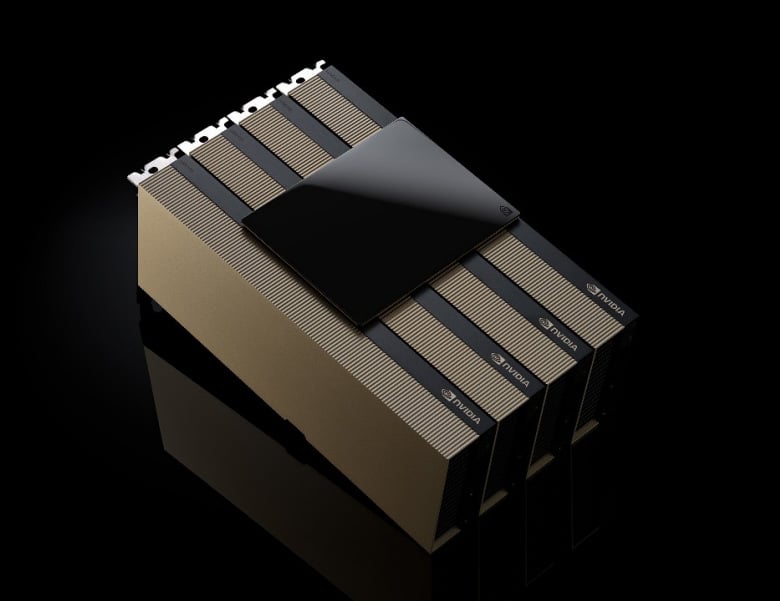

NVIDIA H200 NVL

AI Acceleration for Mainstream Enterprise Servers

NVIDIA H200 NVL is ideal for lower-power, air-cooled enterprise rack designs that require flexible configurations, delivering acceleration for every AI and HPC workload regardless of size.

Experience Next-Level Performance

Memory: 1.5X larger and 1.2X faster

Large language model (LLM) inference: 1.7X faster

High-performance computing (HPC): 1.3X faster

* Compared with the H100 NVL.

Maximize System Throughput

The latest generation of NVLink provides GPU-to-GPU communication 7X faster than fifth-generation PCIe, delivering higher performance to meet the needs of HPC, large language model (LLM) inference, and fine-tuning.
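As a rough check on where a figure like 7X can come from, the sketch below divides one peak bandwidth by the other. The two bandwidth values are assumptions taken from commonly published spec-sheet numbers (about 900 GB/s per GPU over NVLink and about 128 GB/s for a PCIe Gen5 x16 link); they are not stated in this document, and the function name `speedup` is purely illustrative.

```python
# Assumed peak bandwidths in GB/s; these values are NOT given in this
# document and come from commonly published spec-sheet figures.
NVLINK_GBPS = 900.0          # assumed per-GPU NVLink bandwidth
PCIE_GEN5_X16_GBPS = 128.0   # assumed PCIe Gen5 x16 bandwidth


def speedup(fast_gbps: float, slow_gbps: float) -> float:
    """Return how many times faster one link is than another."""
    if slow_gbps <= 0:
        raise ValueError("slow_gbps must be positive")
    return fast_gbps / slow_gbps


ratio = speedup(NVLINK_GBPS, PCIE_GEN5_X16_GBPS)
print(f"NVLink vs. PCIe Gen5: {ratio:.2f}x")  # prints "NVLink vs. PCIe Gen5: 7.03x"
```

Under these assumptions the ratio rounds to 7, in line with the claim above. Peak link bandwidth is only an upper bound, so measured application speedups will generally differ.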

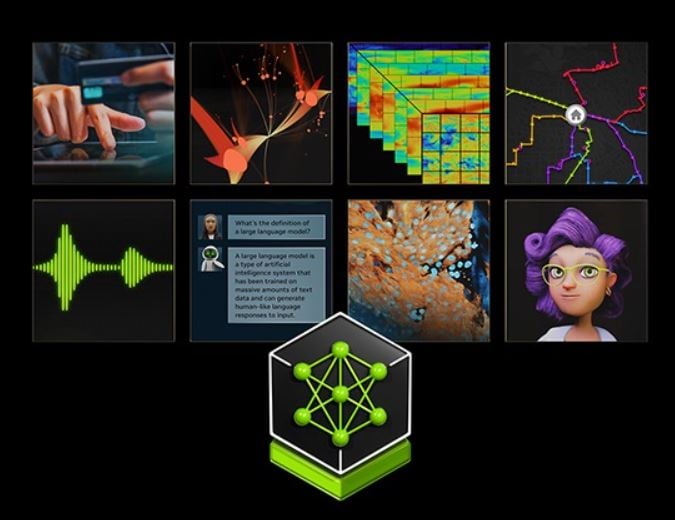

Enterprise-Ready

The NVIDIA H200 NVL is paired with powerful software tools that enable enterprises to accelerate applications from AI to HPC. It comes with a five-year subscription to NVIDIA AI Enterprise, a cloud-native software platform for the development and deployment of production AI. NVIDIA AI Enterprise includes NVIDIA NIM microservices for the secure, reliable deployment of high-performance AI model inference.

Reduce Energy

With the H200 NVL, energy efficiency reaches new levels. AI factories and supercomputing systems that are not only faster but also more eco-friendly deliver an economic edge that propels the AI and scientific communities forward.