As artificial intelligence moves deeper into professional workflows, one hidden cost keeps surfacing across industries: waiting. Teams wait for models to train. Engineers wait for simulations to finish. Data scientists wait for massive datasets to load. Over time, these delays quietly drain momentum and slow innovation more than most organizations realize.

For years, professionals have faced an uncomfortable compromise. They either iterate slowly on a local machine that struggles to keep up, or they push workloads into distant data centers and accept a fragmented workflow. Cloud platforms certainly offer scale. However, they also introduce latency, cost, and operational complexity—especially during early experimentation.

Intel’s Xeon 600 processor series for workstations challenges that tradeoff directly. Instead of forcing creators and developers to choose between local speed and cloud-scale power, Intel brings server-class capability into a single-socket workstation. As a result, the distance between the desk and the data center shrinks dramatically. Heavy compute no longer has to live somewhere else.

The Shift from “Fast Enough” to “Always Ready”

AI PCs have made local AI accessible. Built-in neural processing units (NPUs) handle transcription, image enhancement, and assistive features efficiently. For everyday productivity, these systems are more than capable.

Professional workloads behave differently. When models run continuously, when simulations last hours, and when datasets grow into the hundreds of gigabytes, performance must hold steady over the long haul, not just impress in short bursts. In these environments, waiting is not a minor inconvenience; it becomes a structural limitation.

AI workstations exist to remove that friction. They are not defined by a single benchmark or feature. They are defined by how little they slow you down.

The Rise of the 86-Core Single-Socket Workstation

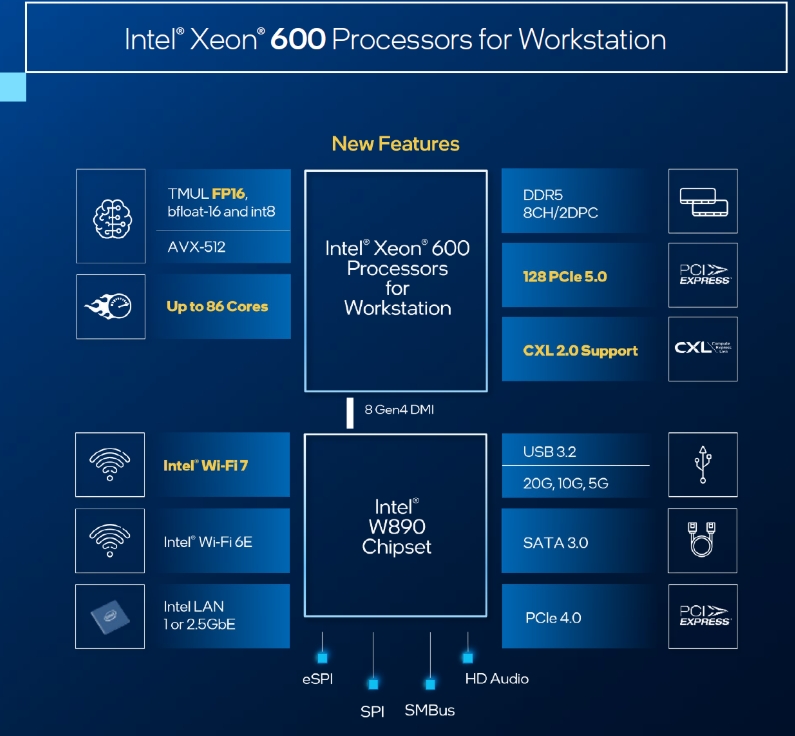

At the heart of the Xeon 600 workstation platform is a fundamental shift in compute density. Intel has brought up to eighty-six cores and one hundred seventy-two threads into a single socket, without relying on hybrid core designs.

Every core in the Xeon 600 series is a performance core. For professionals, this matters more than it sounds. Predictable execution is critical for simulation, rendering, and parallel AI workloads. There are no efficiency cores to manage around, no scheduling surprises under load. What you see is what you get, even hours into a job.

Intel pairs this density with an enormous shared cache. Flagship X-series processors include hundreds of megabytes of L3 cache, dramatically reducing latency for data-heavy operations. When more of a workload's working set stays in cache, close to the cores, iteration speeds improve in ways that clock speeds alone cannot explain.

For users who need even more control, select Xeon 600 X-series processors are unlocked, opening the door to advanced tuning and optimization in environments where performance margins matter.

When Local AI Development Outgrows the Cloud

Cloud infrastructure plays an essential role in modern AI deployment. Development is a different story, though: the most sensitive, experimental, and iterative phases of AI work often happen locally.

This is where Xeon 600’s integrated AI acceleration becomes strategically important. Each core includes Intel Advanced Matrix Extensions (AMX), which accelerate in hardware the matrix multiplications at the heart of modern AI workloads, using low-precision formats such as BF16 and INT8. These capabilities let the CPU handle inference, preprocessing, and certain training tasks efficiently, reducing reliance on GPUs for every step.
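On Linux, the kernel reports AMX support as CPU feature flags (`amx_tile`, `amx_bf16`, and `amx_int8`) in `/proc/cpuinfo`. The sketch below assumes an x86 Linux host and simply checks for those flags, which can be a useful sanity check before routing matrix-heavy work to the CPU. The helper names `cpu_flags` and `amx_support` are hypothetical; optimized AI libraries perform their own hardware detection, so this is a diagnostic, not a dispatch mechanism.

```python
from pathlib import Path

# AMX-related feature flags as reported by the Linux kernel in /proc/cpuinfo.
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the CPU feature flags from the first 'flags' line (x86 layout)."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Typical line: "flags\t\t: fpu vme de ... amx_tile ..."
            _, _, values = line.partition(":")
            return set(values.split())
    return set()


def amx_support(cpuinfo_text: str) -> dict[str, bool]:
    """Map each AMX flag to whether the kernel reports it."""
    flags = cpu_flags(cpuinfo_text)
    return {flag: flag in flags for flag in sorted(AMX_FLAGS)}


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")  # present on Linux only
    if path.exists():
        print(amx_support(path.read_text()))
    else:
        print("No /proc/cpuinfo found; this sketch assumes a Linux host.")
```

Seeing the flags only confirms that the hardware and kernel expose AMX; whether a given model actually benefits depends on the libraries and data types (such as BF16 or INT8) used in the pipeline.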

Just as importantly, Xeon 600 workstations mirror the architecture of Intel Xeon-based data centers. For developers, this alignment matters: code built, tested, and refined locally is far more likely to behave consistently when scaled out. Using Intel’s oneAPI software ecosystem, teams can move from local experimentation to broader deployment without rethinking their entire pipeline.

The local machine stops being a place for quick prototypes and becomes a full development environment.

Memory as the Real Divider Between PCs and Workstations

In AI and data science, memory capacity often defines what is possible long before compute becomes the bottleneck. Consumer systems frequently force developers to design around memory limits, splitting datasets, paging to disk, and restructuring workflows to fit the hardware.

Xeon 600 workstations largely remove that constraint. With support for up to four terabytes of DDR5 memory across eight memory channels, these systems allow even very large datasets to remain resident in memory. That changes how problems are approached.
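The capacity figure is easy to reason about with simple arithmetic: four terabytes spread across eight channels works out to 512 GB per channel. The sketch below is a hypothetical helper, not a vendor tool. It checks whether a dataset would stay resident while leaving headroom for the operating system, framework buffers, and intermediate copies. The binary units (1 TiB = 1024 GiB) and the 25% headroom are arbitrary assumptions chosen for illustration.

```python
# Illustrative assumptions: binary units and a fixed headroom fraction.
GIB = 1024**3
TIB = 1024 * GIB

MAX_MEMORY_BYTES = 4 * TIB  # platform maximum cited in the article
MEMORY_CHANNELS = 8         # channel count cited in the article


def per_channel_capacity(total_bytes: int, channels: int) -> int:
    """Capacity each channel must hold to reach the total."""
    return total_bytes // channels


def fits_in_memory(dataset_bytes: int, installed_bytes: int, headroom: float = 0.25) -> bool:
    """True if the dataset fits while reserving `headroom` of RAM
    for the OS and working copies (a hypothetical sizing policy)."""
    usable = installed_bytes * (1.0 - headroom)
    return dataset_bytes <= usable


if __name__ == "__main__":
    print(per_channel_capacity(MAX_MEMORY_BYTES, MEMORY_CHANNELS) // GIB, "GiB per channel")
    # A 600 GiB dataset on a 1 TiB configuration versus a 256 GiB one.
    for installed in (1 * TIB, 256 * GIB):
        print(installed // GIB, "GiB installed:", fits_in_memory(600 * GIB, installed))
```

Real memory needs are usually a multiple of the raw dataset size once decompression, feature engineering, and model state are counted, so the headroom value is worth tuning to the actual workflow.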

Developers can iterate without constant I/O delays. Data scientists can explore full datasets instead of samples. Engineers can run complex simulations without simplifying assumptions imposed by hardware limits.

The platform also supports multiple memory technologies, allowing professionals to tune systems for either maximum capacity or higher bandwidth, depending on the workload. Either way, memory becomes far less likely to be the limiting factor.
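The bandwidth side of that tradeoff follows a standard formula: theoretical peak bandwidth equals channel count times transfer rate times bytes per transfer, where a DDR5 channel carries 64 data bits (8 bytes). The transfer rates in this sketch are hypothetical placeholders, not published specifications for Xeon 600 memory configurations, and sustained real-world bandwidth always falls below the theoretical peak.

```python
# Theoretical peak DRAM bandwidth = channels x transfers per second x bytes per transfer.
# A DDR5 channel carries 64 data bits (two 32-bit subchannels), i.e. 8 bytes.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Theoretical peak bandwidth in decimal GB/s; sustained throughput is lower."""
    return channels * mega_transfers_per_s * 1e6 * BYTES_PER_TRANSFER / 1e9


if __name__ == "__main__":
    # Hypothetical transfer rates for illustration only.
    for rate in (5600, 6400, 8000):
        print(f"8 channels @ {rate} MT/s: {peak_bandwidth_gbs(8, rate):.1f} GB/s")
```

Because peak bandwidth scales linearly with both channel count and transfer rate, populating all eight channels matters as much as DIMM speed: leaving channels empty cuts the theoretical peak proportionally.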

A Workstation That Scales Like Infrastructure

Modern AI systems rarely rely on a single accelerator. GPUs, high-speed storage, and networking all play a role. Xeon 600 platforms are designed around this reality.

With extensive PCIe 5.0 connectivity directly from the processor, workstations can support multiple high-end GPUs alongside fast NVMe storage without forcing devices to compete for the same lanes. This enables systems that grow over time, evolving from development machines into production-grade local compute nodes.
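Planning a multi-GPU build mostly comes down to lane arithmetic. PCIe 5.0 signals at 32 GT/s per lane with 128b/130b encoding, or roughly 3.9 GB/s per lane in each direction, so a full x16 link offers about 63 GB/s. The sketch below totals the lanes a hypothetical build needs and compares them against a lane budget. The device list and the 128-lane budget are placeholders, not published Xeon 600 figures; check the actual platform and motherboard specifications.

```python
from dataclasses import dataclass

# PCIe 5.0: 32 GT/s per lane, 128b/130b line encoding.
PCIE5_GT_PER_S = 32
ENCODING_EFFICIENCY = 128 / 130


def lane_bandwidth_gbs(lanes: int) -> float:
    """Approximate one-direction PCIe 5.0 bandwidth in GB/s, before protocol overhead."""
    return lanes * PCIE5_GT_PER_S * ENCODING_EFFICIENCY / 8


@dataclass
class Device:
    name: str
    lanes: int  # link width the device needs to run at full speed


def lanes_required(devices: list[Device]) -> int:
    """Total CPU lanes needed to give every device its full link width."""
    return sum(d.lanes for d in devices)


if __name__ == "__main__":
    # Hypothetical build: three x16 GPUs and two x4 NVMe drives.
    build = [Device("GPU 1", 16), Device("GPU 2", 16), Device("GPU 3", 16),
             Device("NVMe 1", 4), Device("NVMe 2", 4)]
    budget = 128  # placeholder lane budget, not a published specification
    needed = lanes_required(build)
    status = "fits" if needed <= budget else "oversubscribed"
    print(f"{needed} lanes needed of {budget}: {status}")
    print(f"x16 link: ~{lane_bandwidth_gbs(16):.1f} GB/s per direction")
```

Giving each device its own full-width link from the CPU, rather than sharing lanes through a switch, is what keeps GPUs and storage from contending for bandwidth.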

Support for Compute Express Link (CXL) further extends this scalability. By enabling memory-coherent connections between the CPU and external devices, Xeon 600 lays the groundwork for future expansion models where memory and acceleration are no longer confined to the motherboard.

The workstation becomes less like a PC and more like modular infrastructure.

Connectivity That Matches the Compute

As workflows become more distributed, connectivity becomes part of performance. Xeon 600 platforms introduce next-generation wireless and wired options designed to keep data moving.

Support for Wi-Fi 7 enables multi-gigabit wireless speeds that rival traditional wired networks. In hybrid offices and flexible workspaces, this lets users move large datasets quickly without being tethered to a desk. Integrated Ethernet options ensure stable, high-throughput connections when wired infrastructure is required.

In either case, the system is designed so that connectivity does not become the bottleneck.

Stability, Security, and the Business Reality

Performance alone does not define professional hardware. AI workstations often live in environments where downtime has real costs. Xeon 600 platforms integrate enterprise-grade manageability and security features through Intel vPro technologies.

Remote management, recovery capabilities, and hardware-level security support allow teams to deploy and maintain systems with confidence. For studios, labs, and enterprises, this stability is as valuable as raw performance.

It is the difference between a powerful machine and a dependable one.

Who This Level of Compute Is Really For

Not every user needs an AI workstation. That is by design.

Xeon 600-based systems are built for professionals whose work is constrained by waiting rather than imagination: developers training and refining models locally, data scientists working with massive datasets, creators running AI-assisted rendering and simulation, and engineering teams pushing the limits of what can be done on a single machine.

For these users, eliminating wait time changes how problems are solved.

The New Definition of the High-Performance Desk

Intel Xeon 600 processors redefine what belongs on a professional desk. By bringing server-class compute density, memory capacity, and expansion into a single-socket workstation, they remove barriers that once separated local systems from data centers.

The result is a shift from being compute-constrained to compute-unleashed. When hardware no longer dictates iteration speed, the scope of what feels possible expands dramatically.

That is the true role of an AI workstation in 2026—not to impress on paper, but to disappear as a limitation.

Building an AI Workstation with Newegg

Designing a workstation capable of supporting modern AI workloads requires more than choosing a powerful processor. Memory configuration, GPU selection, storage architecture, cooling, and platform compatibility all shape long-term performance and reliability.

Newegg offers access to workstation-class processors, professional GPUs, high-capacity memory, and expansion-ready components that allow builders to create systems aligned with real workloads. Whether you are assembling a dedicated AI development machine or upgrading an existing platform, the right choices can turn local compute into a lasting competitive advantage.